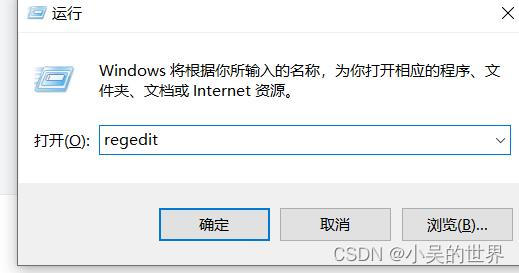

TL;DR: torchvision's `Resize` behaves differently depending on whether the input is a `PIL.Image` or a torch tensor from `read_image`. The transform accepts either PIL Images or tensors, but the resizing does not produce the same image: one is much softer than the other. The solution was to skip the new tensor API and just use PIL as the image reader.

After converting the fastai transforms to `torchvision.transforms`, I noticed that my model's performance dropped significantly. Initially I thought it was fastai's fault, but the whole problem came from the new interaction between `torchvision.io.read_image` and the `Resize` transform. So I had to make the reading and preprocessing of images as close as possible to the fastai `Transform` pipeline to get accurate model outputs.

On the Pillow side, call `resize()` on the image itself, e.g. `background.resize(...)`, instead of `Image.resize(background.size, resample=0)`. The `resize()` method is an instance method, not a class method.
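The softness gap comes down to antialiasing. Pillow's `resize()` filters over the whole source footprint when downscaling, while, as far as I know, the tensor path of torchvision's `Resize` only antialiases by default from torchvision 0.17 on (earlier releases defaulted to `antialias=None`, which meant no antialiasing for tensors). The sketch below shows the effect with Pillow and NumPy alone: it downsamples random noise with `Image.NEAREST` (no filtering) and with `Image.BILINEAR` (Pillow's antialiased bilinear), then compares how much high-frequency detail survives. The noise image and the 4x factor are arbitrary illustration choices, and `NEAREST` only stands in for "unfiltered"; it is not what torchvision does internally.

```python
import numpy as np
from PIL import Image


def downscale(img: Image.Image, size: tuple[int, int], resample: int) -> np.ndarray:
    """Resize via Pillow's instance method and return the pixels as float64."""
    # resize() is called on the image object, not on the Image class
    return np.asarray(img.resize(size, resample=resample), dtype=np.float64)


rng = np.random.default_rng(0)
# 256x256 grayscale noise: plenty of high-frequency detail that can alias
noise = Image.fromarray(rng.integers(0, 256, size=(256, 256), dtype=np.uint8))

sharp = downscale(noise, (64, 64), Image.NEAREST)  # copies single source pixels
soft = downscale(noise, (64, 64), Image.BILINEAR)  # averages over a wider footprint

print(f"std nearest:  {sharp.std():.1f}")
print(f"std bilinear: {soft.std():.1f}")
```

A much lower standard deviation for the bilinear result is what "way softer" looks like numerically; a model trained on one kind of input can score noticeably worse on the other.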

PIL image resize install

The model is a simple image classifier trained with fastai. In our deployment environment we do not include fastai as a requirement and rely only on pure PyTorch to process the data and run inference. (I am waiting to finally be able to install only the fastai vision part, without the NLP dependencies. This is coming soon, probably in fastai 2.3; at least it is on Jeremy's roadmap.)
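Without fastai at inference time, reading and resizing can stay entirely in PIL so that the resize matches the PIL-based training pipeline. A minimal sketch, assuming the model expects 224x224 RGB input normalized with ImageNet statistics; both are assumptions, and the sketch does not reproduce fastai's crop/pad behavior. `preprocess` is a hypothetical helper name. It returns a NumPy array, which `torch.from_numpy` turns into a tensor without copying.

```python
import io

import numpy as np
from PIL import Image

# Assumed normalization statistics (ImageNet); use whatever the training pipeline applied.
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def preprocess(source, size=(224, 224)) -> np.ndarray:
    """Read with PIL, resize with PIL, return a normalized CHW float32 array.

    `source` is anything Image.open accepts (path or file-like object);
    `size` is (width, height), as in PIL.
    """
    with Image.open(source) as img:
        img = img.convert("RGB")                         # drop alpha / palette modes
        img = img.resize(size, resample=Image.BILINEAR)  # PIL does the resize, not a tensor op
    x = np.asarray(img, dtype=np.float32) / 255.0        # HWC in [0, 1]
    x = (x - MEAN) / STD                                 # per-channel normalization
    return x.transpose(2, 0, 1).copy()                   # CHW, contiguous for torch.from_numpy


# Usage: encode a solid red test image in memory and preprocess it
buf = io.BytesIO()
Image.new("RGB", (320, 240), color=(255, 0, 0)).save(buf, format="PNG")
buf.seek(0)
batch = preprocess(buf)[None]  # add a batch dimension -> (1, 3, 224, 224)
```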

PIL image resize code

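Yesterday I was refactoring some code to put on our production code base.

It's the best I could do; it only works with black-and-white images:

```python
import cv2
import numpy as np


def resize_with_padding(image, size=(224, 224)):
    """Resize a black-and-white image to the specified (height, width) size,
    adding padding to preserve the aspect ratio."""
    target_h, target_w = size
    # Calculate the aspect ratio of the image
    h, w = image.shape[:2]
    aspect_ratio = h / w
    # Calculate the new height and width after resizing to `size`
    if aspect_ratio > target_h / target_w:
        new_height = target_h
        new_width = int(new_height / aspect_ratio)
    else:
        new_width = target_w
        new_height = int(new_width * aspect_ratio)
    resized_image = cv2.resize(image, (new_width, new_height), interpolation=cv2.INTER_NEAREST)
    # Create a black image with the target size
    padded_image = np.zeros(size, dtype=np.uint8)
    # Calculate the number of rows/columns to add as padding
    pad_top = (target_h - new_height) // 2
    pad_left = (target_w - new_width) // 2
    # Add the resized image to the padded image, with padding on both sides
    padded_image[pad_top:pad_top + new_height, pad_left:pad_left + new_width] = resized_image
    return padded_image
```

A more general version should work with either a black-and-white or a color image:

```python
import cv2
import numpy as np


def resize_with_padding(image, shape_out, DO_PADDING=True, TINY_FLOAT=1e-5):
    """Resize an image to shape_out, adding padding to preserve the aspect ratio."""
    if image.ndim == 3 and len(shape_out) == 2:
        shape_out = [*shape_out, 3]
    hw_out, hw_image = [np.array(x[:2]) for x in (shape_out, image.shape)]
    resize_ratio = np.min(hw_out / hw_image)
    hw_wk = (hw_image * resize_ratio + TINY_FLOAT).astype(int)

    # cv2.resize expects (width, height), so reverse (h, w)
    image_wk = cv2.resize(image, tuple(int(v) for v in hw_wk[::-1]),
                          interpolation=cv2.INTER_NEAREST)
    if not DO_PADDING or np.all(hw_out == hw_wk):
        return image_wk

    # Create a black image with the target size
    padded_image = np.zeros(shape_out, dtype=image.dtype)
    # Center the resized image: half of the spare rows/columns on each side
    dh, dw = (hw_out - hw_wk) // 2
    padded_image[dh:dh + hw_wk[0], dw:dw + hw_wk[1]] = image_wk
    return padded_image
```

A similar mismatch shows up with blurring: the outputs of Pillow's `GaussianBlur` and OpenCV's `cv2.GaussianBlur` are completely different, but the `DataLoader` could only take a PIL Image as input. One major difference: in Pillow you set a radius, while in OpenCV you set the kernel size, which is literally a diameter. You should use `ksize=(7, 7)` to achieve the same result.

Try to use this function:

```python
from PIL import ImageOps


def padding(img, expected_size):
    """Pad img (without resizing) to a centered square of side expected_size."""
    desired_size = expected_size
    delta_width = desired_size - img.size[0]
    delta_height = desired_size - img.size[1]
    pad_width = delta_width // 2
    pad_height = delta_height // 2
    # ImageOps.expand takes a (left, top, right, bottom) border
    border = (pad_width, pad_height, delta_width - pad_width, delta_height - pad_height)
    return ImageOps.expand(img, border)


def resize_with_padding(img, expected_size):
    """Shrink img to fit in an expected_size square, then pad it to that square."""
    img = img.copy()
    img.thumbnail((expected_size, expected_size))  # in place; keeps aspect ratio, never enlarges
    return padding(img, expected_size)
```

Here is another way to do that in Python/OpenCV/NumPy. It uses NumPy slicing to copy the input image into a new image of the desired output size at a given offset. I think this is easier to do using width, height, xoffset and yoffset rather than how much to pad on each side. Here I compute the offset to do center padding (wrapped in a function; `pad_center` is just an illustrative name):

```python
import numpy as np


def pad_center(img, new_image_width, new_image_height, color):
    """Copy img into the center of a new image of the desired size filled with color."""
    old_image_height, old_image_width, channels = img.shape
    # create new image of desired size and color for padding
    # (e.g. blue is (255, 0, 0) in OpenCV's BGR channel order)
    result = np.full((new_image_height, new_image_width, channels), color, dtype=np.uint8)
    # compute center offset
    x_center = (new_image_width - old_image_width) // 2
    y_center = (new_image_height - old_image_height) // 2
    # copy img image into center of result image
    result[y_center:y_center + old_image_height,
           x_center:x_center + old_image_width] = img
    return result
```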
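Pillow's `ImageOps` module also provides `pad`, which scales an image to fit inside a target size and centers it on a filled canvas, covering the thumbnail-plus-expand approach in one call. One difference worth knowing: `thumbnail()` only ever shrinks, so small inputs end up padded rather than enlarged, whereas `pad` scales up as well. A minimal sketch, assuming a Pillow version that ships `ImageOps.pad`; `letterbox` is a hypothetical helper name, and the 200x100 red test image and black fill are arbitrary.

```python
from PIL import Image, ImageOps


def letterbox(img: Image.Image, size: tuple[int, int], fill=(0, 0, 0)) -> Image.Image:
    """Scale img to fit inside size (width, height), keeping its aspect ratio,
    and center it on a canvas filled with `fill`."""
    # Pass an explicit fill color rather than relying on the default
    return ImageOps.pad(img.convert("RGB"), size, color=fill, centering=(0.5, 0.5))


src = Image.new("RGB", (200, 100), color=(255, 0, 0))  # wide red test image
out = letterbox(src, (224, 224))
print(out.size)                  # (224, 224)
print(out.getpixel((0, 0)))      # padding area: black
print(out.getpixel((112, 112)))  # image area: red
```

Here the 2:1 image is scaled to 224x112 and placed with equal black bands above and below.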
